Moritz v3


Introduction to version 3, January 2015

Top level user interface

Background

The future of music notation

A 21st century time paradigm

Summary

History (archived documentation)

Acknowledgments

Introduction to version 3, January 2015

Moritz was born in 2005, and was named after Max’s terrible twin (see Max and Moritz). Max is a program which specializes in controlling information (sounds) at the MIDI event level and below. Moritz deals with the MIDI event level and above (musical form). MIDI events are the common interface (at the notehead symbol level) between these levels of information.

Moritz is an open source Visual Studio solution, written in C#, and hosted at GitHub.

Anyone is welcome to look at the code, fork the solution or its underlying ideas, and use them in any way they like.

Any help and/or cooperation would be much appreciated, of course.

The Assistant Performer has split off to become a separate program, written in HTML and JavaScript, that runs in browsers on the web.

The code for the Assistant Composer was thoroughly overhauled during the autumn of 2014, and should now be much easier to understand. Many optimisations are still possible, but at least the worst of the spaghetti has disappeared. (My coding style is a bit pedestrian by present day C# standards, but maybe that's not such a bad thing.)

The biggest change, apart from cleaning up the code, is that scores can now contain both input and output chords. This enables much greater control over what happens when MIDI input information arrives during a live performance: parallel processing can be used to enable a non-blocking, "advanced prepared piano" scenario. Single key presses can trigger either simple events or complex sequences of events, depending on how the links inside the score are organized. An example score can be viewed (but not yet played) here.
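
The linking idea can be illustrated with a minimal sketch. It is written in Python purely for illustration and is not Moritz' C# code or the score format itself: the names `OutputChord`, `build_links` and `on_key_press`, the key numbers and the millisecond offsets are all hypothetical. It shows only how one key press might map to either a single event or a sequence of events; the parallel, non-blocking playback is left out.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class OutputChord:
    """A hypothetical output chord: when it sounds and which MIDI pitches it plays."""
    ms_offset: int        # milliseconds after the triggering key press
    pitches: List[int]    # MIDI note numbers


def build_links() -> Dict[int, List[OutputChord]]:
    """Return an invented link table: input key number -> output chords it triggers."""
    return {
        # A simple event: one key press, one chord.
        60: [OutputChord(0, [60, 64, 67])],
        # A complex sequence: one key press, three chords spread over time.
        62: [
            OutputChord(0, [62]),
            OutputChord(250, [65]),
            OutputChord(500, [69]),
        ],
    }


def on_key_press(links: Dict[int, List[OutputChord]], key: int) -> List[OutputChord]:
    """Look up the output chords linked to a key; unlinked keys trigger nothing."""
    return links.get(key, [])
```

In this sketch key 60 triggers a single chord, key 62 triggers a three-chord sequence, and any other key triggers nothing.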

Another important change is in the way ornaments are composed in palettes. Ornament value strings are no longer related to krystals, but are now entered directly instead. This removes an unnecessary awkwardness, and should help create more interesting ornaments and ornament relations in future.

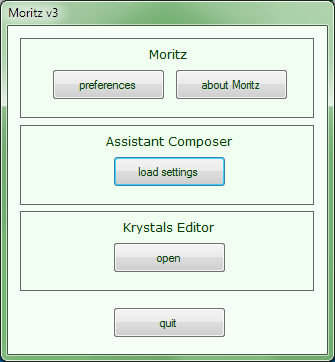

Top level user interface

Moritz' opening window is a simple entry point, from where the Assistant Composer or the Krystals Editor can be started:

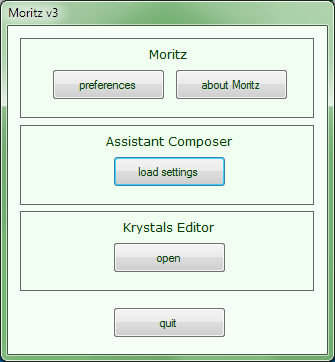

Preferences

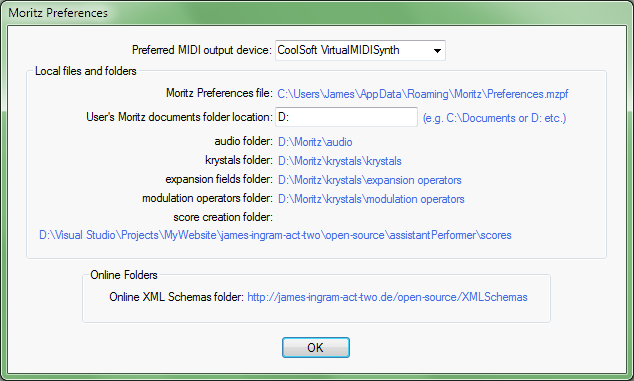

About

Background

Moritz' origins go back to the early 1980s, when I had been trying for a decade or so (as a professional copyist of Avant-Garde music) to find some answers to the conceptual mess that had been plaguing music notation since the beginning of the century. I suddenly understood that much of the confusion could be avoided by keeping the spatial and temporal domains of music notation strictly separate in one's mind.

I realized that all music notation (including standard 20th century music notation) is timeless writing: ink smears on paper. It has no necessary relation to clock time.

This meant that there was no reason for symbols to have to add up (in clock time) in bars, and that tuplet symbols are unnecessary. I published these ideas, backed up by further arguments, in the essay The Notation of Time in 1985.

Tuplets are, in fact, a 19th century mistake. Earlier music doesn't use them, so why should we?

This solved the conceptual problems in notation being faced by the Avant Garde at the time, but did not solve the parallel, temporal problems of performance practice and rehearsal time. The Early Music revival, which was in full swing at the time, used recordings to develop traditions of performance practice, but this option was not available to composers trying to develop their own, specific performance practices.

Having a default performance embedded in a score is a possible answer to this problem:

The SVG-MIDI score format clearly differentiates between spatial and temporal responsibilities by containing symbols whose appearance (graphics) is independent of their meaning (embedded temporal information). The temporal information in such scores defines a default performance: a performance that can be experienced and imitated.

It is simply a mistake to try to define a (temporal) default performance using (spatial) graphics (tuplets, metronome marks etc.). The whole point of music notation is that it should be legible in real time, and be a reminder of something that has been experienced beforehand. It does not have to be an exhaustive description of the symbol's meaning.

SVG-MIDI scores can contain a composer's original default performance, but a different score having the same graphics could just as well contain a recording of a later performance, created by a real performer.
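
The separation of graphics from embedded temporal information can be sketched as follows. This is an illustration in Python under stated assumptions, not the SVG-MIDI format itself: `ScoreSymbol`, `glyph` and `ms_duration` are hypothetical names, and all the durations are invented.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ScoreSymbol:
    """A hypothetical score symbol with separate spatial and temporal parts."""
    glyph: str        # spatial: what is drawn on the page
    ms_duration: int  # temporal: embedded performance information, in milliseconds


def graphics(score: List[ScoreSymbol]) -> List[str]:
    """Return only what a reader sees."""
    return [symbol.glyph for symbol in score]


def performance_ms(score: List[ScoreSymbol]) -> List[int]:
    """Return only what a listener hears (durations in milliseconds)."""
    return [symbol.ms_duration for symbol in score]


# Two scores with identical graphics but different embedded performances.
composer_default = [
    ScoreSymbol("quarter", 500),
    ScoreSymbol("eighth", 250),
    ScoreSymbol("eighth", 250),
]
later_recording = [
    ScoreSymbol("quarter", 540),
    ScoreSymbol("eighth", 230),
    ScoreSymbol("eighth", 310),
]
```

Both scores look the same on the page, yet each carries its own performance: the symbols do not need to "add up" in clock time for either one to be valid.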

I have been trying very hard to get other people to listen to these ideas since 1985. This has, however, proved to be a very difficult undertaking: unfortunately it is not enough, even on the web, just to provide good, logical arguments showing that standard 20th century music notation contains mistakes and is unnecessarily complicated.

Karlheinz Stockhausen, whose principal copyist I had been since 1974, said (referring to a source that I have forgotten) that if you have an idea that is really new, then it takes a generation for the synapses in people's brains to grow together to catch up. He was himself unable to take these ideas on board, and simply ignored them. Less excusably, the academic world is, after about 30 years, still looking the other way.

"Getting inside the machine" to develop software libraries is a way to build on previous work, and to make progress, even without much help from anyone else. Feedback is very important for the development of any ideas, even if it is self-reflexive feedback. Talking to machines at least means that ideas have to be formulated precisely, and that there is no way to cheat. Also, formulating ideas very precisely often suggests ways to develop them.

Re-usable software libraries instantiate and express generalizations, but they need to be tested using concrete instances. For Moritz, the test cases are currently just my compositions (which are experimental music). If other composers want to write for the Assistant Performer, they will either have to use Moritz' classes, fork the code and develop some of their own, or write their own SVG-MIDI score generators from scratch. The file format is itself style-neutral.

I'm interested in both the generalizations and the test cases, but for me the generalizations are actually more important. That is the area that is especially dependent on cooperation. That's where agreement needs to be found. Where culture is. The days of Romantic heroes, pretending that they have all the answers, are long gone.

The future of music notation

The Assistant Composer/Performer project is part of an effort to re-enable writing as a means of composing large-scale polyphonic processes. Music is not the only application.

Chord symbols, staves and beams evolved in music notation over several hundred years to communicate a high density of polyphonic information on the page. Needing to be readable in real time, they evolved to be as legible as possible, making them good candidates for use in graphic user interfaces on computers.

Because the symbols communicate a much higher information density than the space=time notations currently used everywhere in today’s music software, they ought to become a useful alternative in a large number of music applications.

But there are uses in any area that could benefit from being able to program multiple parallel threads. I can even imagine music notation being used as the basis for new kinds of programming language. (The symbols don't necessarily have to contain MIDI information.)

Future uses of SVG-MIDI scores are not limited to simple music-minus-one played in private (think Mozart's Clarinet Concerto). The scores can describe any kind of temporal process, including both passive recordings and processes which require live control. Performances can be cooperative, with several live performers on different computers. Output devices can include ordinary synthesizers, robot orchestras (or other teams of robots), lighting systems etc.

The Assistant Composer currently writes only a few of the more important annotations — score metadata (title, author etc.), bar numbers, staff names — but there is no reason why other annotations (such as verbal instructions, tuplets etc.) should not be added in a non-functional layer in the SVG. If tuplet annotations were present, many 19th and 20th century scores could be presented with familiar graphics, making it easier for performers to continue their existing traditions of performance practice.

A 21st century time paradigm

The simple, 19th century common-sense time paradigm was that people are like expressive metronomes. This dualism led to the mistaken development of tuplet symbols in standard music notation, and also meant that 19th century music notation theory took no account of performance practice traditions. These failings eventually led to the collapse of written music at the beginning of the 20th century, so I think it would be a good idea to try to describe a more modern version in order to avoid such problems for as long as possible in future.

Working on Moritz, and reading the lay scientific literature, leads me to think of time as follows:

The arrow of time is part of a brain strategy for dealing with complexity. It is the prerequisite for perception (consciousness), because without it we would suffer from information overload.

In the 21st century, the mathematical equations that describe space-time seem not to have a time-arrow. The arrow seems to be more closely related to consciousness than to bed-rock physical reality.

Whatever else they are, brains are a part of the space-time manifold where the universe is looking at itself, so they can't see the whole picture. And they also need to simplify the information that they are perceiving (the percepts). I think of brains as being little bits of space-time that look at the universe by grabbing other little bits of space-time, separating them into space and time, calling them "here" and "now", and then using chunking to develop higher level structures for coping with the percepts.

Brains use chunking both in perceived space and perceived time. They also use it to create other, quasi-dimensions: We don't perceive the frequencies of colours or sounds, just their effect (greenness, blueness, stringy timbre, windy timbre etc.). Space, time, greenness, blueness, stringy timbre, windy timbre etc. don't exist at all at the physical, space-time level. The physical level is not perceived at all, and can only be described using mathematics. But, using the idea of chunking, the percepts are compatible with the equations.

For whatever reason, there is a smallest duration that can be perceived. It used to be thought, rather vaguely, that brains are analog devices that scan a time-continuum containing arbitrarily small instants. But is our perceived "now" really infinitesimally short? Does perceived time just appear continuous because we can't perceive the joins between the chunks of time we are perceiving? At the physical level, there is no perceived time at all, only space-time. For me, brains deal with chunked time, and the chunks are not infinitesimally small.

Moritz, following the Web MIDI API, measures time in increments of 1 millisecond (the DOMTimeStamp).
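
One practical consequence of working in whole milliseconds is that fractional durations have to be rounded. The sketch below, in Python, shows one common way to do this without letting rounding errors accumulate: round the cumulative positions rather than the individual durations. It is an assumption about how such a conversion could be done, not a description of Moritz' code, and `quantize_ms` is a hypothetical name.

```python
from typing import List


def quantize_ms(durations: List[float]) -> List[int]:
    """Convert fractional millisecond durations to whole milliseconds.

    Each event's start position is rounded to the nearest millisecond, and the
    integer durations are the differences between consecutive rounded positions.
    The total length therefore equals the rounded total of the input, so
    rounding errors never add up over a long sequence.
    """
    boundaries = [0]
    elapsed = 0.0
    for duration in durations:
        elapsed += duration
        boundaries.append(round(elapsed))
    # Differences between neighbouring boundaries are the integer durations.
    return [end - start for start, end in zip(boundaries, boundaries[1:])]
```

For example, three equal events sharing one second cannot each last exactly 333.33 ms; `quantize_ms([1000 / 3] * 3)` distributes the second as whole-millisecond durations that still sum to 1000.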

Once brains have begun to create time chunks from the space-time manifold, these are further grouped into higher level symbols so that they are more easily comprehensible. This can be seen happening in the words we use for higher levels of musical structure. We have words for timbres, notes, phrases, bars, movements, symphonies and so on even though these are impossible to see in the flat space=time diagrams used in current sound editing/playing software. These high level musical structures, especially the smaller ones, seem to be somehow accessible to us as if they were there all at once. Time chunking seems to work in a very similar way to the spatial chunking that happens in music notation (noteheads, chord symbols, beams, bars, systems, staves etc.).

So perceived time does not have the simple, direct relation to a physical time-continuum that is pretended by standard tuplets. Musical time is not related to an ether-like, absolute, continuous, divisible time.

Note too that tempi are at a much higher information level than timbre. Tempi, if any, in music are related to the sizes of dancing human bodies. Tempi are fundamental to standard 20th century music notation, but they are not fundamental to musical time. There is no perceived tempo at the lowest level of time perception.

Perceived time is related to perceived events and memory. I think of short term memory as being closely related to the direct reading of a region in the space-time manifold, and longer term memory as working differently. However the different levels of memory work, I think that no memory of any kind would be possible without the brain's ability to create higher level symbols from more basic ones.

Performers learn to control perceived time in the context of a real, living tradition of performance practice (which we perceive as being stored somehow in human memory). Max is about our interaction with physical machines, Moritz is about linking those machines to human memory.

But musicians need only be concerned with how their memories work in practice. Physicists and brain specialists can be left to discover how they work under the hood.

Summary

History (archived documentation)

Archived documentation for Moritz v1

Acknowledgments